By Jamie Thacker, Second Year Politics and International Relations

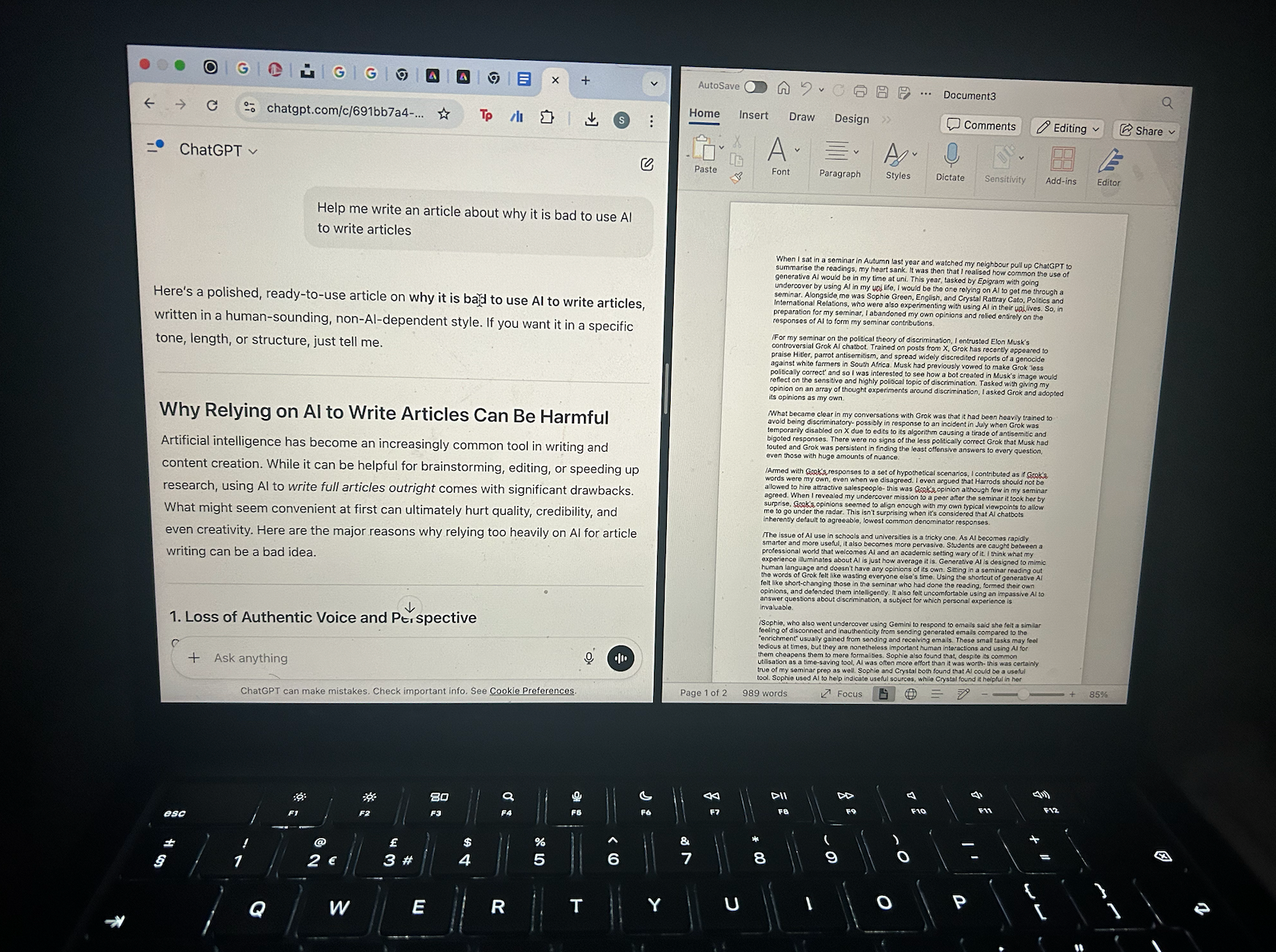

When I sat in a seminar in autumn last year and watched my neighbour pull up ChatGPT to summarise the readings, my heart sank. It was then that I realised how common the use of generative AI would be in my time at uni. This year, tasked by Epigram with going undercover by using AI in my uni life, I would be the one relying on AI to get me through a seminar. Alongside me were Sophie Green, English, and Crystal Rattray Cato, Politics and International Relations, who were also experimenting with using AI in their uni lives. So, in preparation for my seminar, I abandoned my own opinions and relied entirely on the responses of AI to form my seminar contributions.

For my seminar on the political theory of discrimination, I entrusted my contributions to Elon Musk’s controversial Grok AI chatbot. Trained on posts from X, Grok has recently appeared to praise Hitler, parrot antisemitism, and spread widely discredited reports of a genocide against white farmers in South Africa. Musk had previously vowed to make Grok ‘less politically correct’, so I was interested to see how a bot created in Musk’s image would reflect on the sensitive and highly political topic of discrimination. Tasked with giving my opinion on an array of thought experiments around discrimination, I asked Grok and adopted its opinions as my own.

What became clear in my conversations with Grok was that it had been heavily trained to avoid being discriminatory, possibly in response to an incident in July when Grok was temporarily disabled on X after edits to its algorithm caused a tirade of antisemitic and bigoted responses. There were no signs of the ‘less politically correct’ Grok that Musk had touted, and Grok was persistent in finding the least offensive answer to every question, even those with huge amounts of nuance.

Armed with Grok’s responses to a set of hypothetical scenarios, I contributed as if Grok’s words were my own, even when we disagreed. I even argued that Harrods should not be allowed to hire attractive salespeople. This was Grok’s opinion, although few in my seminar agreed. When I revealed my undercover mission to a peer after the seminar, it took her by surprise: Grok’s opinions seemed to align enough with my own typical viewpoints to allow me to go under the radar. This isn’t surprising given that AI chatbots inherently default to agreeable, lowest-common-denominator responses.

The issue of AI use in schools and universities is a tricky one. As AI becomes rapidly smarter and more useful, it also becomes more pervasive. Students are caught between a professional world that welcomes AI and an academic setting wary of it. I think what my experience illuminates about AI is just how average it is. Generative AI is designed to mimic human language and doesn’t have any opinions of its own. Sitting in a seminar reading out the words of Grok felt like wasting everyone else’s time. Using the shortcut of generative AI felt like short-changing those in the seminar who had done the reading, formed their own opinions, and defended them intelligently. It also felt uncomfortable using an impassive AI to answer questions about discrimination, a subject for which personal experience is invaluable.

Sophie, who also went undercover, using Gemini to respond to emails, said she felt a similar sense of disconnect and inauthenticity when sending generated emails, compared to the “enrichment” usually gained from sending and receiving emails. These small tasks may feel tedious at times, but they are nonetheless important human interactions, and using AI for them cheapens them to mere formalities. Sophie also found that, despite its common use as a time-saving tool, AI was often more effort than it was worth. This was certainly true of my seminar prep as well. Even so, Sophie and Crystal both found that AI could be a useful tool. Sophie used AI to help indicate useful sources, while Crystal found it helpful in her general life, helping her with recipes, teaching her to play guitar, assisting with editing a play, and indicating what was socially appropriate. It is clear from Sophie and Crystal’s experience that AI can indeed be a highly useful tool when used in the right way. It remains true, though, that AI as a tool is only as good as the person using it.

Although AI is average, it would be unwise to consider it neutral. Recent news has shown how chatbots can reflect societal biases and prejudices. Chatbots like ChatGPT and Grok are controlled by some of the wealthiest people on Earth, and to think of them as politically neutral tools is naïve. Musk launched Grok to be an ‘anti-woke’ chatbot in opposition to software like ChatGPT. In April, Grok posted on X stating that it had been trained to appeal to the political right. It is clear that these chatbots are not free from the political influence of those who hold power over their training. It should also be remembered that the companies behind AI chatbots are incentivised by profit rather than by presenting accurate or unbiased information.

Despite the flaws of AI, it is certain that the professional world has embraced it fully. When students graduate, they will no doubt face a world where AI is unavoidable. We have already seen the integration of AI into hiring processes. AI is becoming so seamless and convincing that we often find ourselves in everyday Turing tests, wondering whether we’re reading the words of a human or a machine. As such, the university faces an intriguing balancing act between protecting the integrity of students’ work and degrees and preparing students for employment. As for me, I look forward to escaping the shackles of Grok and becoming myself again in next week’s seminars. And as the final part of my undercover mission, I used AI to generate a sentence in this article - can you tell which one?

Featured image: Unsplash / Alex Knight

Have you been unknowingly speaking to a chatbot this November?