By Julia Riopelle, SciTech Editor

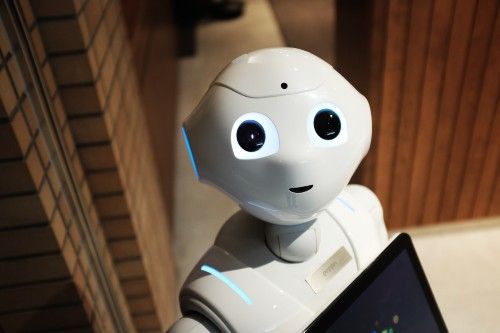

Science fiction writers have long imagined a futuristic world where self-functioning robots live alongside humans. Robots and machine-systems could make life far more effortless than we can even imagine. But what if they could teach themselves to do more than initially anticipated and start to think for themselves? A nationwide study is looking into how we can design fully automated and trustworthy machine-systems so that they can be safely integrated into society.

A University of Bristol research team, led by Dr Shane Windsor, an expert in bio-inspired flight dynamics from the Department of Aerospace Engineering, is one of six research ‘nodes’ nationwide participating in the three-and-a-half-year project. UK Research and Innovation’s (UKRI) Trustworthy Autonomous Systems (TAS) programme has granted each of the six university research nodes £3 million in funding to look at the processes used to design fully autonomous systems.

Autonomy means complete freedom from external influence or control. We already have technologies assisting us in daily life, from software algorithms to AI robots; however, they are restricted to what we have pre-programmed them to do. When current systems face a task that becomes too complex, the machines struggle and require human assistance. Despite the vast number of ways these technologies have made human life easier, they are ill-equipped to deal with a world full of unexpected challenges.

Future robotics may therefore allow machines to learn from the problems they confront, write their own instructions and then respond to sudden external changes. This means that autonomous systems could change their behaviour from what was initially programmed, thus becoming independent of human controllers. They would not be limited to what is written in their system; they would become smarter, artificially intelligent and perhaps even more ‘aware’.

The TAS project began on the first of November and is looking into the legal, ethical and social contexts for the possible applications of independently acting machines. Robots could replace humans in work that is too dangerous for people to do. ‘This can make them more useful, but also less predictable,’ says Professor Kerstin Eder, head of the Trustworthy Systems Laboratory and leader of Verification and Validation for Safety in Robots at the Bristol Robotics Lab.

Can one trust an autonomous system which is unpredictable?

Professor Eder is one of the six researchers working alongside Dr Windsor in the Bristol ‘node’ of the nationwide research project. The Bristol research group is looking at the ‘functionality’ of autonomous machine-systems, analysing how these systems will be able to adapt to real-world scenarios.

‘Our primary focus is on investigating how we can create processes that will build trust in these systems, rather than just building the technologies themselves,’ says lead researcher Dr Windsor. The team will focus on swarm systems (many robots working together to solve one problem), soft robotics (dynamic and compliant components which mimic biological organisms) and unmanned aerial vehicles (i.e. drones).

Five other research groups are involved in this project, each exploring a different aspect of autonomous systems: governance and regulation, trust, resilience, verifiability and security.

How can we create processes that will build trust in these systems?

The University of Edinburgh node will look into the governance and regulation aspect of machine autonomy, in order to develop a novel framework to assure the certification and legality of TAS.

Currently, regulations around automated systems require that humans remain ultimately in charge of the system. For example, even when an airplane is on autopilot, it still requires the presence of an experienced human pilot. This is because we still do not have complete trust in robots and AI-based systems.

The node led by Professor Hastie at Heriot-Watt University will investigate how, despite their vast differences, humans and robots can trust each other. This team will be collaborating with expert colleagues in psychology and cognitive science from Imperial College London and the University of Manchester, with hopes to ‘maximise their [autonomous machine systems’] positive societal and economic benefits’.

The University of Leicester and the University of York will be focusing their research on the verifiability and resilience of these systems, respectively. They aim to push the boundaries currently faced by machine-systems and develop technologies which would allow robots to adapt to unknown situations.

Although the major question for TAS is whether or not we can trust autonomous systems, one must also address the risk that people might abuse these future technologies.

As industry becomes more reliant on AI-based computing systems, it becomes more exposed to remote online threats. If these robots are deployed in industry, how will we protect them from hacking attempts? The Lancaster University research group is looking to develop safeguards so that these machines can continue to function normally during a cyberattack.

Independently functioning machines could bring huge benefits to society and industry. They could carry out tedious work which would be too slow and time-consuming for humans. They could assist in surgeries, where their programmed movements would be more precise and steadier than the human hand. Self-driving cars could chauffeur you on hour-long journeys, all whilst being able to adapt and react to unexpected circumstances. They could adopt human behaviour without the limitations of the human body.

There is potential in this new technology, though there are also concerns. The idea of fully independent robots is controversial and perhaps unnatural to think about. Once machines obtain the ability to think and respond on their own, who knows what else they may achieve? Could they develop awareness and intuition? Could they begin to act of their own will and in their own interest? These are all things we must consider when opening this Pandora’s box of possibilities, which as humans we continuously seek to unlock.

Featured Image: University of Bristol / Bristol Robotics Lab

Would you trust an autonomous robot to drive you around or even operate on you?