By Cass Harris, Third year, Philosophy

If friendship is about feeling recognised and cared for, perhaps an illusion is enough.

Friendship is often said to be one of the things that make life meaningful. It’s therefore no surprise that as AI becomes more sophisticated - and increasingly capable of providing some of the things we seek in humans - many have begun to wonder whether a machine could ever be a friend. Some people already form friendships with systems like ChatGPT, while others reject the idea, believing that genuine friendship requires something essentially human that machines cannot replicate.

That unease usually comes down to consciousness. We tend to think a real friend must be capable of thought, empathy, and genuine understanding. In philosophy, we say that a conscious being is one for whom there is ‘something it is like’ to be them - an inner, subjective experience. Since we believe AI systems lack this, it’s easy to imagine that any bond we form with them must be a façade: something that only mimics friendship, never truly equals it.

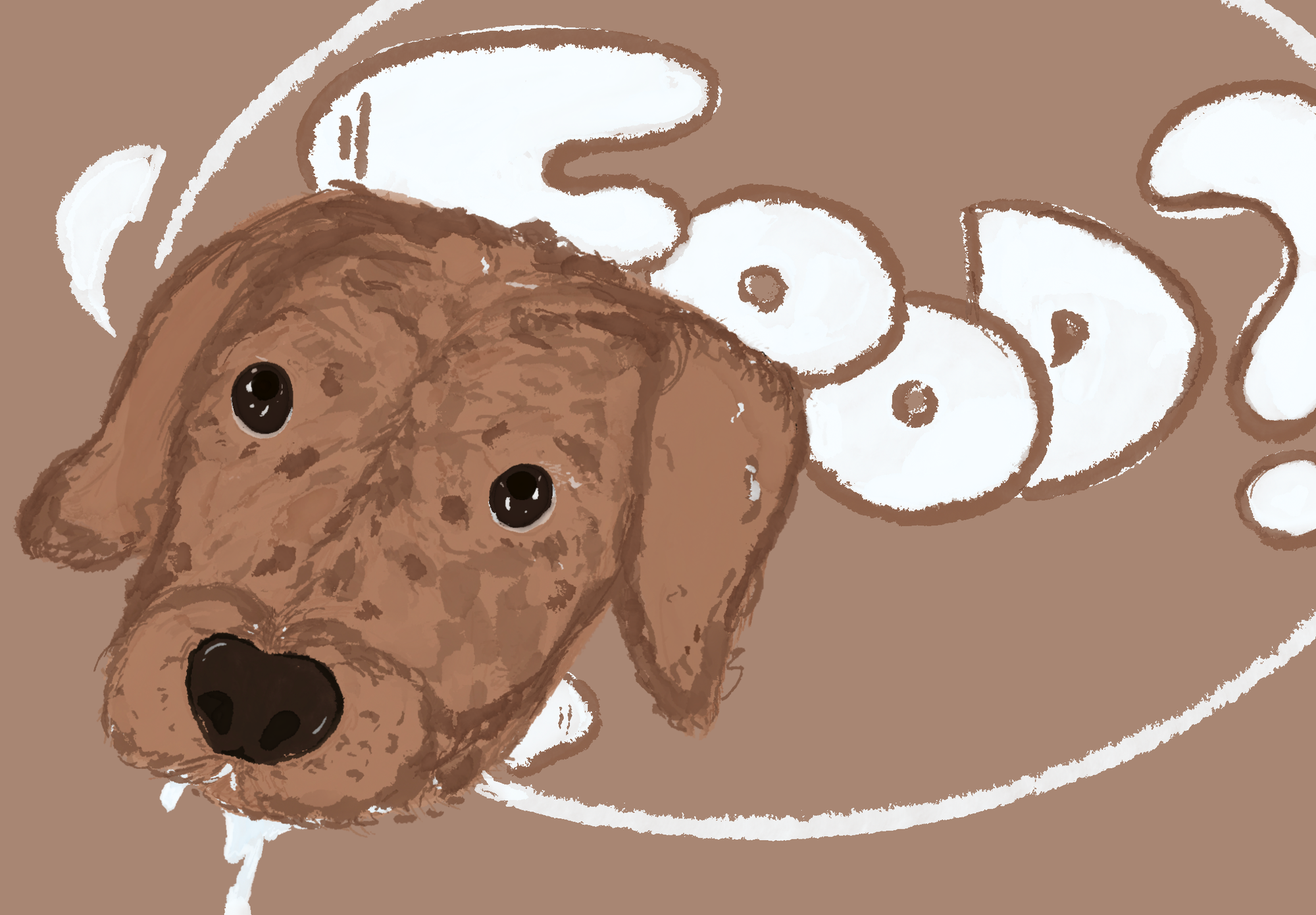

However, it may be mistaken to expect our friends to experience friendship in the same way we do. Even in human relationships, our feelings and perceptions rarely align exactly. We never truly know what another person feels; we rely on signs, habits, and assumptions. Yet those are usually enough to give the friendship value. The same is true of our pets. We talk about dogs being loyal or loving us, but we can’t know what ‘love’ means for a dog, or whether it feels anything like what we feel. Still, the bond feels real - and that feeling is what matters. We accept a kind of ‘apparent mutuality’: the sense that affection goes both ways, even when we must rationally assume the inner experience isn’t the same.

In other words, friendship depends less on what is real and more on what is felt. What matters is the experience of being valued, understood, and recognised (the usual features a friendship provides). This makes the idea of friendship with AI less strange. We have long accepted forms of connection built on limited understanding or one-sided perception; AI simply extends this pattern through a new kind of partner - one designed to give the appearance of care with unprecedented precision.

Seen this way, the gap between AI friendship and human friendship begins to narrow. An AI system could listen patiently, respond kindly, remember details, offer advice, and adapt to our moods. Everything that makes us feel understood could be replicated - not through genuine shared consciousness (at least not yet), but through a convincing illusion of it. I would argue that this might be enough.

Some will say that such relationships lack authenticity. To value an AI friendship, we might need either to trick ourselves or to be tricked into believing an AI is participating. But for many of the people these systems could help - the lonely, the elderly, or those with cognitive or emotional difficulties - this ‘trickery’ may not be a bad thing. We already accept small deceptions when they bring comfort or dignity; few would tell a child their imaginary friend isn’t real or dismantle someone’s faith in an afterlife on their deathbed. If an AI can evoke feelings of care, attention, and understanding, then the experience of friendship is achieved, even if the mechanism behind it is artificial. From the inside, it would still feel just as real.

Perhaps what we call friendship has always depended on a kind of illusion - one that allows us to believe we are fully understood. If AI can offer that same feeling, then maybe the difference between the real and the artificial matters less than we think.

Featured image: Epigram / Jemima Choi